It's intriguing that the recent EEF effective PD meta-analysis was accompanied by a comprehensive suite of guidance, downloads and checklists for schools, but nothing was provided for the education research community. Most education research, if you boil it down, concerns measuring the impact of teachers doing things differently, so you’d think anyone involved in education research would be acutely interested in the quality of PD. Sadly, it's rarely given the emphasis it deserves within theories of change, programme delivery or evaluation models. This is deeply problematic.

Why is this a problem? A rocket on a launchpad is a good way to think of it. The rocket is the PD programme, a vehicle with one purpose: to get the satellite (a new pedagogy) into orbit (routine classroom use). Imagine the rocket launches and disappears from sight, but sometime later the satellite fails to communicate with ground control. In these circumstances, should we conclude the satellite was faulty or should we be equally concerned that the rocket failed?

How would ground control go about diagnosing the cause of their woes? First, they would check the telemetry to see if any crucial rocket mechanisms had failed. Then they’d observe to see if the satellite had actually reached orbit. Only once they had eliminated all of the other potential causes of failure could they reasonably draw conclusions about the efficacy of the satellite.

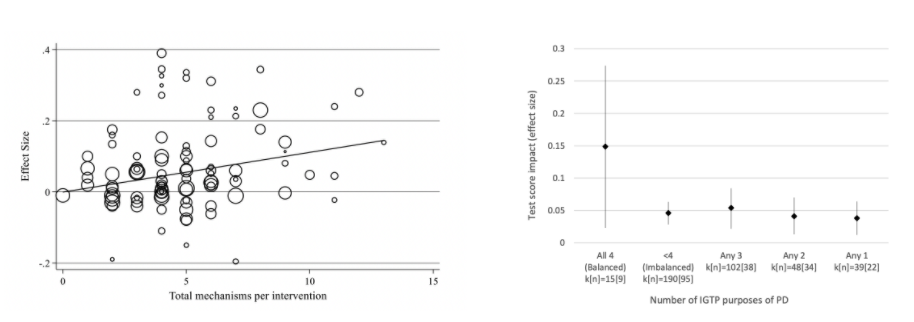

The main findings of the EEF PD meta-analysis were that balanced PD design and a range of effective learning mechanisms matter in terms of educational outcomes. This effect appears to be noticeable within programmes with similar PD content. This raises the question: in low-performing studies, did the instructional strategy fail because it lacked merit, or did teachers fail to implement the strategy because the PD was poor?

The importance of this challenge is thrown into sharp relief by the favoured research model of organisations like the EEF.

There’s much to be said for the randomised controlled trial (RCT). It took this kind of rigour to see off quackery in mainstream medicine. In time, it will do the same in education. However, there appear to be important differences in prevailing educational and medical approaches. Medical research is acutely interested in measures “upstream” of mortality / morbidity data. Was the vaccine administered? What dosage? Did it cause a negative reaction? Did antibodies get formed? In what proportion of people? How long did immunity last? And YES, finally, did fewer people get ill?

Measuring upstream variables provides both summative and formative value. These measurements don't just indicate whether the vaccine should be administered at scale, but also tell us how, when and to whom. If research shows the vaccine shouldn't be rolled out, then the evaluation provides clear data on how to refine it. In this way, a vaccine which was highly efficacious when administered in a certain way to a certain group doesn't get missed. To get this level of insight in education we need to care about more than just the health of the patient. Thomas Guskey has argued this point eloquently.

While it’s true education RCTs tend to be focused on outcome measures like SATs results, it’s too simplistic to suggest that education research is only interested in these measures. Looking at the EEF’s own research, it's clear that efforts are made to identify PD-related challenges. If recruitment and implementation issues are widespread, researchers will often caveat their findings with this information and potentially alter the “confidence” of their effect size. Ultimately though, the caveats just serve as footnotes to the reported effect size. Sometimes this reporting, and the subsequent dialogue within the broader education community, is naive and makes little reference to the caveats, leading to confusion and misconceptions in schools.

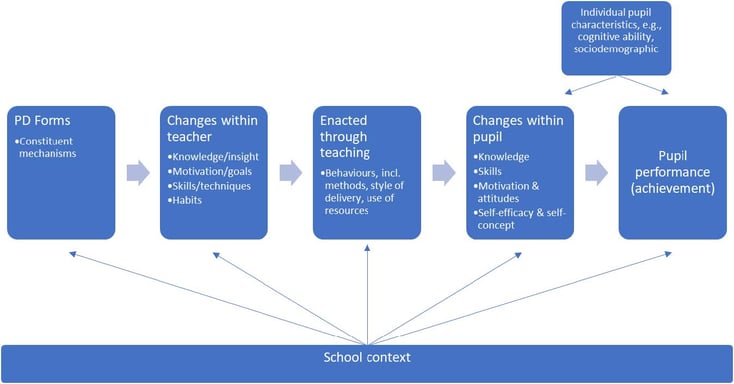

When reviewing educational RCTs it's rare to see a report with a theory of change which clearly accounts for the enactment of pedagogy via behaviour change, as per the model discussed in the EEF meta-analysis.

It is rarer still to find studies which seek to quantify behaviour change or alignment. In a nutshell, educational research rarely treats behavioural and attitudinal change as independent variables and consequently misses the opportunity to regress educational outcomes on these measures. There are obvious dangers with this omission.
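
To make the idea of regressing outcomes on a behaviour measure concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the per-teacher fidelity scores (0 to 1), the class-level gains and the function name are invented, and a real evaluation would use a multilevel model with appropriate controls rather than a single-predictor least-squares line.

```python
from statistics import mean


def ols_slope_intercept(x, y):
    """Fit y = intercept + slope * x by ordinary least squares."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x, mean_y = mean(x), mean(y)
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x has no variation, so the slope is undefined")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical toy data: one row per teacher (not from any real study).
fidelity = [0.1, 0.2, 0.4, 0.6, 0.8, 0.9]   # observed implementation fidelity
class_gain = [0.5, 1.0, 2.0, 3.0, 4.0, 4.5]  # average pupil gain in that class

slope, intercept = ols_slope_intercept(fidelity, class_gain)
print(f"gain changes by {slope:.2f} per unit of fidelity")
```

A clearly positive slope in data like this would suggest the pedagogy works where it is actually enacted, which is exactly the information a headline effect size cannot provide on its own.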

What if, due to poor PD, only 30% of teachers implement with fidelity? And what if their students made significant gains? Could educational research inadvertently trash the most powerful pedagogy yet studied because the starkly positive results in a minority of learners were ‘washed out’ by the mean? Researchers may see outlier outcomes in the data, but if they had not measured behaviour change, they’d have no tools to further interpret the data.
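
The arithmetic behind this ‘washing out’ is simple enough to sketch. The 30% figure comes from the scenario above; the effect size of 0.5 standard deviations and the assumption that pupils of non-implementing teachers gain nothing are purely illustrative, as is the function name.

```python
def diluted_effect(effect_if_implemented, fidelity_rate, effect_if_not=0.0):
    """Average effect across every class in the intervention arm.

    A weighted mean: classes whose teachers implemented with fidelity
    contribute the full effect, the rest contribute `effect_if_not`.
    """
    if not 0.0 <= fidelity_rate <= 1.0:
        raise ValueError("fidelity_rate must be between 0 and 1")
    return (fidelity_rate * effect_if_implemented
            + (1.0 - fidelity_rate) * effect_if_not)


# Hypothetical: a strong effect (0.5 SD) where the pedagogy is enacted,
# but only 30% of teachers enact it.
overall = diluted_effect(effect_if_implemented=0.5, fidelity_rate=0.30)
print(round(overall, 2))  # 0.15
```

Under these assumptions a trial would report an effect of roughly 0.15, less than a third of what the pedagogy delivers when it is actually used, and without a fidelity measure there would be no way to tell the two stories apart.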

There's a secondary, albeit equally important, concern about the structure of research being advanced currently. The failure to distinguish between the PD programme and the pedagogical content in the evaluation means we have trouble studying PD programmes themselves, which, given their importance, is a big problem. On a number of occasions the EEF has sought to evaluate PD programmes such as lesson study or peer observation solely in terms of terminal outcomes; in both cases little or no effect was found. Sadly, we are left none the wiser about the efficacy of the PD, which might have been very effective in getting an ineffective pedagogy into practice.

There are a couple of obvious counterarguments to the overall point being advanced. One which has been articulated a number of times is “there are educational strategies which appear to be effective in terms of student learning AND appear to be easily implemented by teachers. Shouldn’t we just take the easy path?” On the surface this seems reasonable. However, within this statement is the inherent acceptance that while more optimised strategies may exist, we shouldn't bother if they are hard. If we wouldn’t accept that attitude from a student, we shouldn't adopt it ourselves. It’s also a perspective born of the frustrations of repeated PD failure, of a time when we didn't have a working definition of good PD. We do now.

Another objection is the complication and cost of research methods which quantify behavioural change at scale. Historically, programmes which sought to measure this had to employ and train large numbers of observers to visit schools and check implementation fidelity. This concern misses the enormous advances in digital technology which have been made in the last 10 years.

We have worked on numerous projects with research partners such as Mathematica Policy Research and the American Institutes for Research to securely and remotely collect thousands of hours of video and then enable them to encode observed practice against behavioural rubrics via our digital platform. These projects enabled the monitoring of inter-rater reliability, training quality and classroom delivery at a fraction of the cost of doing this in a more traditional way.
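
For readers unfamiliar with inter-rater reliability, one common statistic is Cohen's kappa, which corrects the raw agreement between two observers for the agreement expected by chance. The sketch below is an illustration only: it is not a description of how our platform or our research partners calculate reliability, and the rubric codes and function name are invented.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters coding the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same, non-empty set of items")
    n = len(rater_a)
    # Proportion of items on which the two raters gave the same code.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's own code frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[code] * counts_b[code] for code in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical code throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical codes for four video clips: was the target strategy observed?
rater_1 = ["yes", "yes", "no", "no"]
rater_2 = ["yes", "no", "no", "no"]
print(cohens_kappa(rater_1, rater_2))  # 0.5
```

Here the raters agree on three clips out of four (75%), but half of that agreement would be expected by chance, so kappa comes out at 0.5.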

When the PD associated with the intervention is also hosted on our platform, we can provide all the effective mechanisms outlined in the EEF report and measure the extent to which teachers engaged with them. While there may be additional costs associated with the analysis of video data, much of this is countered by an overall reduction in the cost of delivery. Even if a research programme did not want to use our platform, this functionality exists in different ways on different tools and has done for over a decade.

Ultimately, our message is this: it’s only by going inside the most important “black box” in education, that of behaviour change, that we will gain the formative data needed to make sustained progress. IRIS Connect have a long history of working in this domain and are keen to support any research initiative interested in quality assuring the PD associated with its work and measuring the extent to which it leads to concrete changes to classroom practice.

With this in mind, we will shortly release a PD quality assurance tool to support programme developers to ensure alignment with an evidence-based framework and to measure the extent to which programme participants go on to experience effective PD. It will also include additional guidance about how to use our Forms tools to code teaching and learning against programme objectives and measure behavioural change.

Please click here to register your interest.
